Image Mirroring

When Qovery is running on your infrastructure, it requires an image registry on your cloud account to store the images built via the Qovery CI or to mirror the images deployed from a 3rd party container registry.

This process allows you to:

1. Speed up the deployment process by avoiding rebuilding the application image from scratch every time.
2. Speed up and secure the scale-up of your application, since it doesn't need to pull the image from the source registry every time a new instance of the application is needed.

This mirroring registry is available and configurable in the Qovery interface, in the Mirroring registry section of your cluster.

How does it work

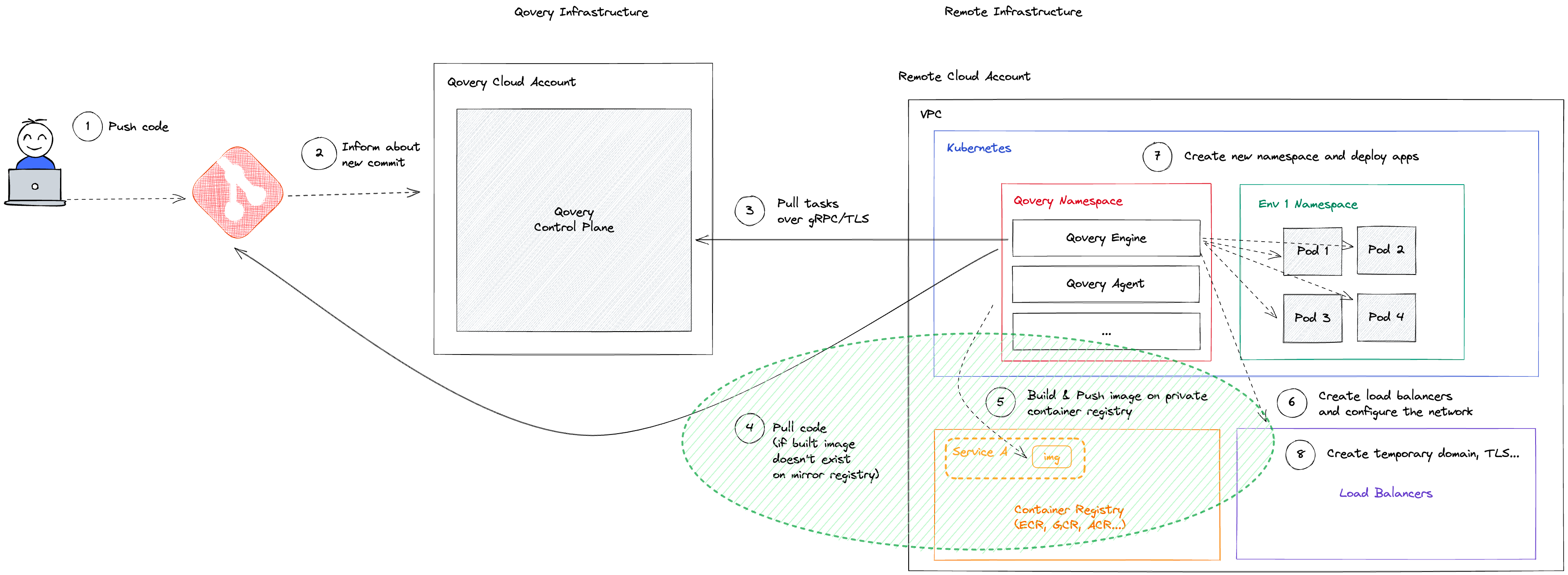

Every time an application needs to be deployed on your cluster, the deployed image is picked from the mirroring registry. How the image is pushed to the mirroring registry depends on whether you build the application with the Qovery CI or not.

Application built via the Qovery CI

At the end of the build process, the Qovery CI pushes the generated image into your mirroring registry. The images pushed here are organized by "Git repository", meaning that the images built for services sharing the same Git repository are pushed to the same repository (or namespace, depending on the cloud provider), named z<short_cluster_id>-git_repo_name.

The tag assigned to the built image is based on the following elements (the build context): commit ID, repository root path, Dockerfile path, Dockerfile content, and the ARG environment variables present in the Dockerfile. This ensures that each service's build and mirroring process is completely isolated from the others.
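To illustrate why a tag derived from the build context changes whenever any of its elements changes, here is a minimal sketch. The hashing scheme, the function name `build_context_tag`, and the sample values are assumptions made for illustration; the exact algorithm Qovery uses is not described here.

```python
import hashlib


def build_context_tag(commit_id, root_path, dockerfile_path, dockerfile_content, build_args):
    """Derive a deterministic tag from the build context (hypothetical scheme)."""
    digest = hashlib.sha256()
    # Sort the ARG variables so that dictionary ordering does not change the tag.
    args_part = "\n".join(f"{key}={value}" for key, value in sorted(build_args.items()))
    for part in (commit_id, root_path, dockerfile_path, dockerfile_content, args_part):
        # Length-prefix each part so adjacent fields cannot blur into one another.
        encoded = part.encode("utf-8")
        digest.update(len(encoded).to_bytes(8, "big"))
        digest.update(encoded)
    return digest.hexdigest()[:16]


DOCKERFILE = "FROM node:20\nARG API_URL\n"

# Same commit and Dockerfile, but a different ARG value: the tags differ.
tag_a = build_context_tag("4f2c1ab", "/", "Dockerfile", DOCKERFILE, {"API_URL": "https://a.example"})
tag_b = build_context_tag("4f2c1ab", "/", "Dockerfile", DOCKERFILE, {"API_URL": "https://b.example"})
# Identical build context: the tag is reproduced exactly.
tag_a_again = build_context_tag("4f2c1ab", "/", "Dockerfile", DOCKERFILE, {"API_URL": "https://a.example"})

print(tag_a != tag_b)        # True
print(tag_a == tag_a_again)  # True
```

Because the tag is a pure function of the build context, two services with the same context resolve to the same image, while any difference in the context yields a separate image.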

Before building the application A1, Qovery checks in the mirroring registry, in the repository of the application A1, whether an image has already been built with the same build context parameters (commit ID, repository root path, Dockerfile path, Dockerfile content, and environment variables) within the same cluster.

If the image already exists, the build is skipped and Qovery starts the deployment of that image on the Kubernetes cluster.

Otherwise, the image is built by the Qovery pipeline and the resulting image is pushed to the mirroring registry, in the repository of the application A1.
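The check-then-build flow described above can be sketched as follows. This is a simplified model, not Qovery's implementation: the registry is an in-memory dictionary, and the names `deploy_built_image`, `run_build`, the repository name, and the tag are hypothetical.

```python
def deploy_built_image(registry, repository, tag, build):
    """Build and push only when the tag is missing from the repository."""
    tags = registry.setdefault(repository, set())
    if tag in tags:
        # An image with the same build context already exists: skip the build.
        return "build skipped"
    build()        # run the (simulated) build pipeline
    tags.add(tag)  # push the resulting image to the mirroring registry
    return "built and pushed"


registry = {}  # maps a repository name to the set of tags it contains
builds = []    # records every build that actually ran


def run_build():
    builds.append("build")


first = deploy_built_image(registry, "z1a2b3c-my-repo", "0f3a9c", run_build)
second = deploy_built_image(registry, "z1a2b3c-my-repo", "0f3a9c", run_build)

print(first)        # built and pushed
print(second)       # build skipped
print(len(builds))  # 1
```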

Once an application is deleted, if no other application is using the same image name and tag, the image is deleted from the mirroring registry. If the image is still used by another application, it will not be deleted until the lifecycle TTL is reached (see [this section](#image-retention-policy)).

To speed up the image build, we use the mirroring registry as a remote cache (available on AWS, GCP and Scaleway). This avoids building the image from scratch: only the layers that changed are rebuilt.

Check out the best practices section below for recommendations that help you benefit fully from the caching system and reduce the build time.

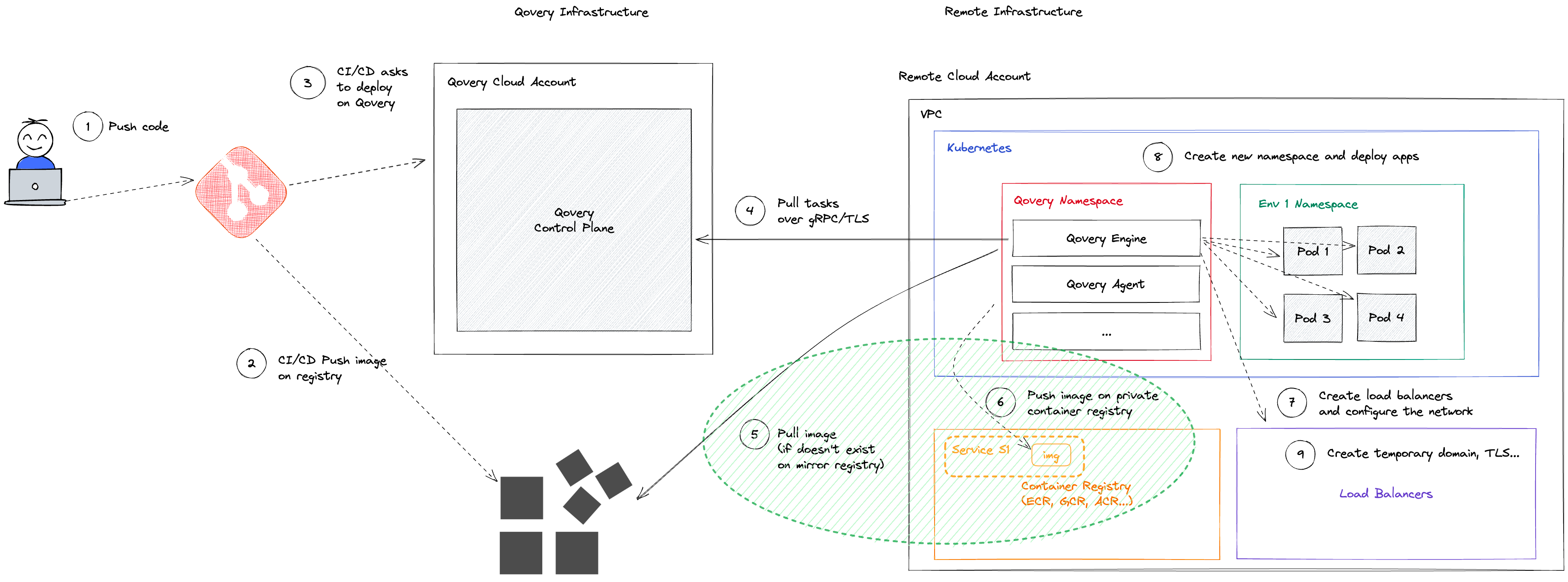

Application deployed from a container registry

Qovery's behaviour in this case depends on the mirroring mode chosen in the cluster advanced settings.

Service (Default)

Images within the mirroring registry are organized by "Qovery service": each service has its own repository (or namespace; the naming depends on the cloud provider). This means that each service's mirroring process is completely isolated from the others.

At the beginning of the deployment of the application A1, Qovery checks in the mirroring registry, in the repository of the application A1, whether an image with the same image name and tag exists.

If the image already exists, the mirroring process is skipped and Qovery starts the deployment of that image on the Kubernetes cluster.

Otherwise, the image is pulled from the source registry and pushed to the mirroring registry, in the repository of the application A1, deleting any previous image.

Pro:

- Images are automatically deleted when not needed anymore

Cons:

- If the same image is used across environments or services, Qovery will mirror the same image multiple times, reducing the deployment speed.

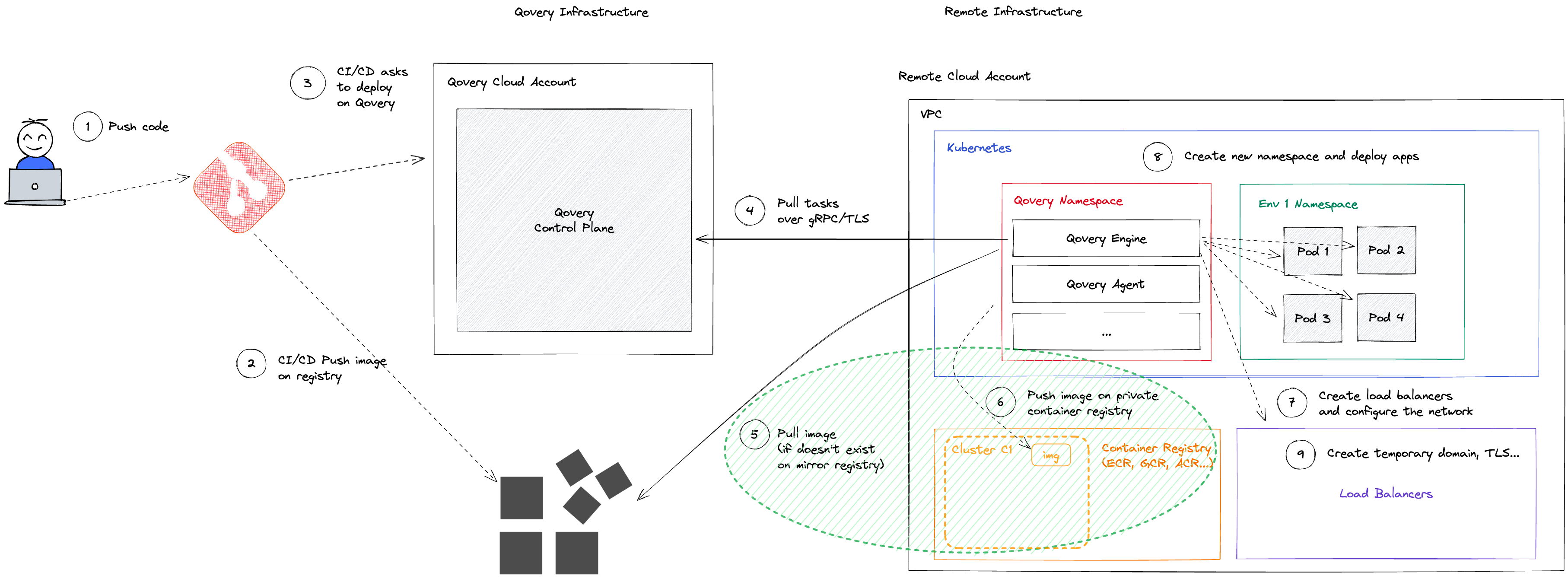

Cluster

Images within the mirroring registry are organized by "Qovery cluster", meaning that the applications deployed on the same cluster are all mirrored to the same repository.

At the beginning of the deployment of the application A1, Qovery checks in the mirroring registry, in the repository of the cluster C1, whether an image with the same image name and tag exists.

If the image already exists, the mirroring process is skipped and Qovery starts the deployment of that image on the Kubernetes cluster.

Otherwise, the image is pulled from the source registry and pushed to the mirroring registry, in the repository of the cluster C1.

Pro:

- If the same image is used across environments or services, this setup avoids mirroring the same image multiple times, increasing the deployment speed.

Cons:

- Qovery can't automatically delete the images mirrored to the mirroring registry. This will increase the cloud provider cost of your image registry, since it will store more data. To reduce the amount of data stored, you can reduce the image TTL via the cluster advanced setting registry.image_retention_time.
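To compare the two mirroring modes, the following sketch models the mirroring registry as an in-memory dictionary and counts how many copies are made when two services deploy the same image. The mode strings, the repository naming (`service-…`, `cluster-…`), and all function names are assumptions for illustration only, not Qovery's actual naming or API.

```python
def mirror_repository(mode, cluster_id, service_id):
    """Pick the repository that holds the mirrored image (names are hypothetical)."""
    if mode == "Service":
        return f"service-{service_id}"
    if mode == "Cluster":
        return f"cluster-{cluster_id}"
    raise ValueError(f"unknown mirroring mode: {mode}")


def mirror_image(registry, mode, cluster_id, service_id, image, tag):
    """Mirror image:tag if it is missing; return True when a copy was made."""
    repository = mirror_repository(mode, cluster_id, service_id)
    stored = registry.setdefault(repository, set())
    if (image, tag) in stored:
        return False  # already mirrored: the mirroring step is skipped
    if mode == "Service":
        stored.clear()  # Service mode replaces the service's previous image
    stored.add((image, tag))
    return True


def count_copies(mode):
    """Deploy the same image for two services and count the mirroring operations."""
    registry = {}
    services = ["a1", "a2"]
    return sum(mirror_image(registry, mode, "c1", s, "nginx", "1.25.3") for s in services)


print(count_copies("Service"))  # 2
print(count_copies("Cluster"))  # 1
```

In this model, Service mode copies the image once per service but keeps each repository small, while Cluster mode copies it once and lets the shared repository grow until the retention TTL removes old images.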

Why image mirroring is necessary

Image mirroring is a general best practice: you don't want your system to be tightly coupled to a third party.

Let's say that you run an application on your production environment and Kubernetes needs to pull the image again to spawn a new instance of the application. In this case, you don't want the pull to fail because your source container registry is unavailable. This is why we make sure that a copy is always available in the container registry next to the Kubernetes cluster.

Why unique image tags are necessary

When working with containerized applications, it is crucial to employ unique image tags for precise version management. This practice ensures complete confidence in the version running within a container. Failing to use unique image tags can lead to adverse consequences due to the image caching mechanisms employed by both the Qovery mirroring system and Kubernetes:

- Mirroring Registry: Qovery’s mirroring system stores images in a registry. If an image tag remains the same between two versions, the new version will not be mirrored. Consequently, the new version will not be deployed, affecting the overall application.

- Kubernetes: Applications deployed by Qovery on Kubernetes use the “IfNotPresent” image pull policy. With this policy, if the image already exists on the Kubernetes node’s local disk, Kubernetes will not attempt to pull it again. As a result, if the image tag remains unchanged, the new image version will not be fetched, and your pods keep running the outdated application code.

In summary, maintaining unique image tags is a critical aspect of effective version control and ensuring that your applications run the intended versions without disruptions caused by caching mechanisms.
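The effect of reusing a tag can be reproduced with a small simulation. The sketch below assumes a mirror that copies an image only when its tag is absent, which is the behaviour described above; the dictionary-based mirror, the function name `mirror_if_missing`, and the digests are hypothetical.

```python
def mirror_if_missing(mirror, tag, source_digest):
    """Copy the image only when the tag is absent, then return what the mirror serves."""
    if tag not in mirror:
        mirror[tag] = source_digest
    return mirror[tag]


mirror = {}

# First deployment: version 1 is mirrored under the tag "myapp:latest".
first = mirror_if_missing(mirror, "myapp:latest", "sha256:aaa")

# A new version is pushed to the source registry under the SAME tag.
# The tag already exists in the mirror, so the old content is served.
second = mirror_if_missing(mirror, "myapp:latest", "sha256:bbb")

# With a unique tag, the new version is mirrored and deployed as expected.
third = mirror_if_missing(mirror, "myapp:1.0.1", "sha256:bbb")

print(first)   # sha256:aaa
print(second)  # sha256:aaa (stale)
print(third)   # sha256:bbb
```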

Disabling the mirroring

If you want to reduce the deployment time by avoiding the mirroring operation, you can push your built images directly into the Mirroring registry.

Push the images to a repository of the image registry that has the same name as the image you want to deploy.

Also, if the source container registry URL (from a service) is the same as the cluster container registry URL, no mirroring is performed.

Example: if the source registry is https://xxx.dkr.ecr.us-west-2.amazonaws.com and the cluster container registry url is https://xxx.dkr.ecr.us-west-2.amazonaws.com, no mirroring would be done.
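The comparison between the source and cluster registry URLs can be sketched as below. How Qovery normalizes the URLs before comparing them is not specified here, so the normalization (case-insensitive scheme and host, trailing slash ignored) and the function name `needs_mirroring` are assumptions.

```python
from urllib.parse import urlparse


def needs_mirroring(source_registry_url, cluster_registry_url):
    """Return False when both URLs point to the same registry (assumed rule)."""

    def normalize(url):
        parsed = urlparse(url)
        # Compare scheme and host case-insensitively and ignore a trailing slash.
        return (parsed.scheme.lower(), parsed.netloc.lower(), parsed.path.rstrip("/"))

    return normalize(source_registry_url) != normalize(cluster_registry_url)


cluster_url = "https://xxx.dkr.ecr.us-west-2.amazonaws.com"

print(needs_mirroring("https://xxx.dkr.ecr.us-west-2.amazonaws.com", cluster_url))  # False
print(needs_mirroring("https://docker.io", cluster_url))                            # True
```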

Example on AWS

Let's say you have a container image called nginx that you build on your CI and the container registry associated with your cluster is https://32432542.dkr.ecr.eu-west-3.amazonaws.com.

You can push this image to the mirroring registry, in the repository nginx, which avoids the mirroring operation: https://32432542.dkr.ecr.eu-west-3.amazonaws.com/nginx

Image retention policy

Depending on the cloud provider, you can define how long an image should be kept in your registry via a TTL (Time To Live). You can configure the TTL via the cluster advanced setting registry.image_retention_time.

Please keep in mind that changing this setting will only affect new ECR repositories created after the change. Existing repositories will not be affected.